Code Europe: exploring Machine Learning within Open Source

In the bustling halls of the Code Europe conference, we had the privilege of taking the stage as a conference speaker. Beyond the honor and excitement of sharing our expertise, the true magic of this experience unfolded during the moments of connection and collaboration that happened offstage. In this blog post, we invite you to join us on a captivating journey through the power of learning and networking at the conference, where minds met, ideas merged, and new opportunities were born.

Thoughtful and insightful conversations about machine learning in production, hacking, open-source business models, and building developer ecosystems came naturally, as did sharing our experience contributing to the DVC extension for VS Code, an experimentation tool.

Project TEMPA. Hacking Teslas for Fun and no Profit

Fig 1. TEMPA project logo. One of the most up-to-date presentations here. GitHub project here

One of the most intriguing sessions we attended was a talk by Martin Herfurt that unveiled the fascinating world of hacking Teslas. The speaker, an expert in cybersecurity, shared insights into the vulnerabilities within Tesla's systems and demonstrated how ethical hacking plays a vital role in enhancing automotive security. It was eye-opening to witness the intricate techniques used to uncover potential vulnerabilities and to see how he has contributed to the overall security landscape by sharing his insights with the open-source community.

During our conversation with Martin, we discovered that his motivation stemmed from a deep sense of curiosity and a background in Bluetooth technology; in fact, people would even turn off their Bluetooth when he was around. He was particularly interested in exploring the interface that Tesla offered.

While getting started with the project was relatively easy, there were still challenges to overcome. Martin found it relatively simple to access and extract the protobuf definitions. However, the code was often obfuscated, requiring a keen sense of discernment to identify references and understand their context. Some of the intricacies also had to be inferred from the signatures.

The TEMPA project goes beyond inspiration, and meeting Martin is something we will always carry in our heads and hearts from this conference. Beyond his fascinating project, we had the opportunity to share how important it still is to bring exploration and the maker mentality to conferences.

→ Large language models on your own data

Deepset.ai's mission is to reduce time to market so that NLP-enabled products can be built faster. It helps developers by providing them with tools to deploy solutions to their language-oriented business problems. It covers the last mile using ad hoc documents, mainly for Q&A tasks, and integrates with other semantic search tools such as Qdrant.

The approach they use is quite interesting: pre-trained LLMs bring out the reasoning and domain knowledge extracted through semantic search over a set of documents that you provide to create the Q&A product. They are experiencing growth driven by the explosion of large language models. Besides, their commitment to open source is quite tangible in their Haystack open-source library.

Dimitry gave a good talk and explored this Q&A scenario with a demo that showcased the situation before and after the Silicon Valley Bank crash. We had the opportunity to have lunch together and share some thoughts about the role of contributors in the open-source space and the ecosystem players.

→ Next-gen CI/CD with GitOps and Progressive Delivery

We enjoyed an insightful, warm, and entertaining yet didactic talk with Kevin, exploring GitOps with Red Hat. For us, one of the best tools presented was OpenShift Pipelines, a serverless CI/CD system that runs pipelines with all the required dependencies in isolated containers and automates deployments across multiple platforms by abstracting away the underlying implementation details. It reminded us of the pipeline schemas that we use in machine learning within MLOps practices. The attendees played a race game together, divided into two teams, and later Kevin deployed a new feature in OpenShift so we could play the game with a transformed mechanic! He guided us through the code and the .yaml configuration files.

Fig 2. GitOps uses git repositories as a single source of truth to deliver infrastructure as code. Submitted code checks the CI process, while the CD process checks and applies requirements for things like security, infrastructure as code, or any other boundaries set for the application framework. Learn more about how Red Hat sees GitOps here

We also explored the intricacies of Progressive Delivery, an incremental release process based on time, percentage of traffic, or both, that lets developers measure how well a release is going through different metrics. Kevin laid out why this might be worth it: deploying and releasing to production faster, decreasing downtime, limiting some ‘tragedies’, and needing less mocking or setup of unreliable ‘fake’ services.

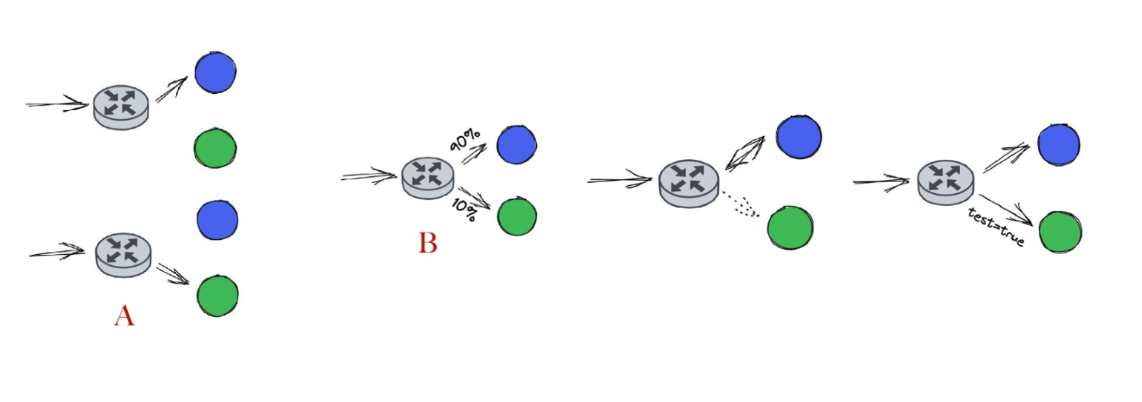

In a non-progressive scenario, we could approach releases with a blue-green scheme (A), an all-or-nothing switch in which, when things don't go as expected, we do a quick rollback. In the progressive scenario (B), canary releases, a small percentage of users is exposed to the release, and we increase that percentage based on data-driven decisions. This mental model opens up a few different approaches. We can mirror traffic: a copy of the traffic is sent to the new release to measure its performance without users being served by it. We can use the Dark Canary approach, in which we select a subset of users based on some criteria (new users, or heavy ones) and test how the release is going with them. Another progressive approach is feature flags, which turn a feature on under a given condition.

Fig 3. Diagrams from Kevin Dubois's slides. Figure A explains blue-green deployment, and Figure B explores canary releases and their variants.
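To make the canary idea more concrete, here is a minimal sketch in Python. It is not taken from Kevin's talk or from any OpenShift tooling: the function names, the 1% error threshold, and the 10-point step are all assumptions chosen for illustration. It shows one common way to expose a stable percentage of users to a release and to decide, based on an observed metric, whether to widen the exposure or roll back.

```python
import hashlib


def canary_bucket(user_id: str) -> int:
    """Map a user id to a stable bucket in the range [0, 100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def route(user_id: str, canary_percent: int) -> str:
    """Return which release serves this user: 'canary' or 'stable'."""
    return "canary" if canary_bucket(user_id) < canary_percent else "stable"


def next_canary_percent(current: int, error_rate: float,
                        max_error_rate: float = 0.01, step: int = 10) -> int:
    """Widen exposure while the canary looks healthy; otherwise roll back to 0.

    The threshold and step are hypothetical values, not recommendations.
    """
    if error_rate > max_error_rate:
        return 0
    return min(100, current + step)
```

Because the bucket comes from a hash of the user id, a user who already sees the canary at 10% keeps seeing it at 20%, which keeps the experience consistent while the percentage grows.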

When we think about applying this to machine learning in production, we could release a newly trained model based on new data and use Progressive Delivery to measure things beyond inference performance, such as data drift or model drift, while limiting the possible negative impact of the decisions derived from the model.

Sharing some time with Kevin beyond the talk was great, and we had the opportunity to ask him some questions about a sustainable mindset for contributors in the open-source space and to talk about DevRelism too. We were glad to find common ground in a fantastic read like this one.

The Lord of the Words: The Return of the Experiments with DVC

Fig 4. The Transformer architecture at various levels: first as a black box, then with its main submodules, and finally the self-attention mechanism.

(Author Gema Parreño)

The main objective of the Lord of the Words talk was to explain the Transformer neural network architecture in the context of translation and to share our open-source exploration with the DVC extension for VS Code, with some narrative storytelling based on The Lord of the Rings.

With the innovative attention mechanism inside their architecture, Transformers have emerged as a critical breakthrough in the field. From the “Attention Is All You Need” paper to today, this architecture has aged well and, together with the data explosion and hardware advancements, has surpassed the previous state of the art in speed and accuracy on several tasks.

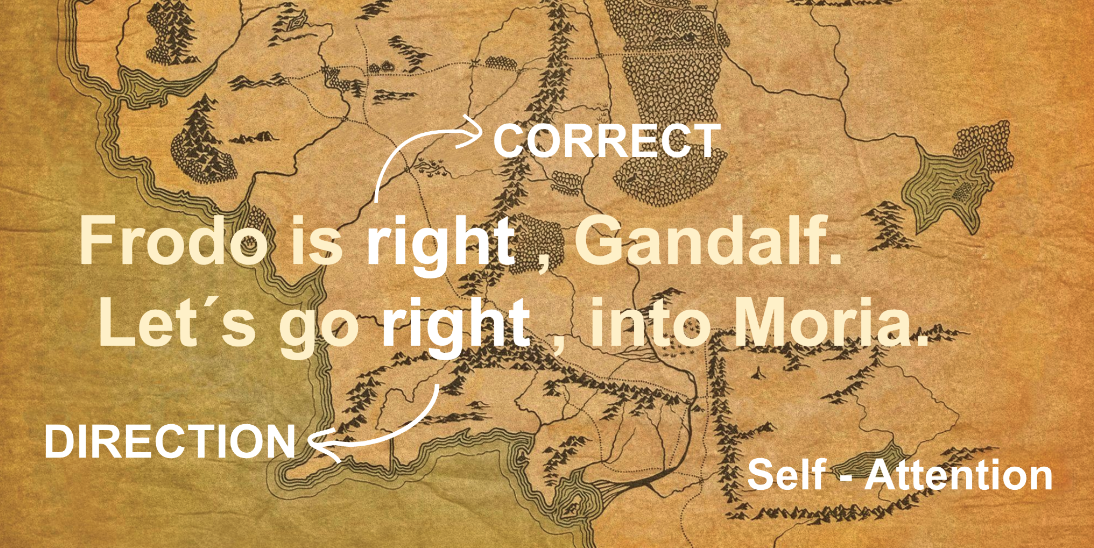

Put simply, the attention mechanism can be seen as a data-dependent transformation of a sequence, built on a scaled dot-product operation, that lets us see and process the context in a sequence in an innovative manner. In the Neural Machine Translation scenario, this produces what we call attention heads, which map with a score the relationship between the elements of the two sequences. With multi-head attention, we scale attention up: we repeat that operation many times, finding those relationships between the sentences. Self-attention goes one step further and maps the relationships between the elements of a single sequence, finding new, context-dependent relationships inside that sequence. With these three concepts, at a conceptual level, we can better understand the difference between Transformers and other architectures such as the seq-to-seq approach.

Fig 5. Self-attention allows the machine learning model to understand that the same word might mean different things depending on its context. Here, the word “right” can refer to either correctness or direction depending on its context.
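The scaled dot-product operation can be sketched in a few lines of plain Python. This is a simplified illustration, not the code from the talk: the function names are ours, and the learned query, key, and value projections that a real Transformer applies before this step are deliberately left out. Each query is scored against every key, the scores are scaled by the square root of the key dimension, turned into weights with a softmax, and used to average the values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights): one output vector and one row of
    attention weights per query.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        row = softmax(scores)
        weights.append(row)
        # The output is the weighted average of the value vectors.
        outputs.append([sum(w * v[i] for w, v in zip(row, values))
                        for i in range(len(values[0]))])
    return outputs, weights


# Self-attention: queries, keys, and values all come from the same sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Passing two different sequences (for example, target-side queries and source-side keys and values) gives the cross-sequence attention described for translation; multi-head attention repeats the same operation several times in parallel with different projections.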

The project's moonshot goal is to explore whether there is a Transformer architecture, trained with the corresponding parameters, that would translate several languages reasonably well. For that, we explored the open-source DVC extension for VS Code, which basically transforms the IDE into a machine learning experimentation platform.

DVC lets you structure your code agnostically and launch experiments with only minor changes. Besides, you can track the experiments with Git, which lets you persist experiments or create branches out of them. The UI offers table and plots sections that keep track of experiments, let us select and persist the best ones, and let us launch experiments and watch training live from there. To get this working, we need to define a pipeline in a dvc.yaml file that encapsulates the different stages, and a params.yaml file that lets us launch different experiments, following the principle of separating definition from instantiation.
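The separation between definition and instantiation can be illustrated with a small sketch in plain Python. It does not call DVC at all: the parameter names and values are hypothetical stand-ins for the contents of a params.yaml file, and `set_param` is a helper written for this example. The idea is similar in spirit to overriding a parameter for a single run, as the `--set-param` option of `dvc exp run` does: the pipeline definition stays fixed, and each experiment is just a different set of values.

```python
import copy


def set_param(params: dict, dotted_key: str, value) -> dict:
    """Return a copy of params with one existing nested value replaced.

    The key uses dots to walk nested sections, e.g. "model.num_heads".
    Raises KeyError if the path does not already exist.
    """
    updated = copy.deepcopy(params)
    node = updated
    *parents, leaf = dotted_key.split(".")
    for key in parents:
        node = node[key]
    if leaf not in node:
        raise KeyError(dotted_key)
    node[leaf] = value
    return updated


# Hypothetical parameters, standing in for the contents of params.yaml.
BASE_PARAMS = {
    "train": {"epochs": 10, "learning_rate": 0.001},
    "model": {"num_heads": 8, "num_layers": 4},
}

# Three experiment instances derived from one definition.
experiments = [set_param(BASE_PARAMS, "model.num_heads", heads)
               for heads in (2, 4, 8)]
```

Each entry in `experiments` is an independent copy, so the base definition is never modified and any run can be reproduced from its own parameter set.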

While developing the talk, we had the opportunity to provide feedback about the onboarding experience, find a bug, and flag a roadblock, which was great! You can find more about the project here.

Many conversations followed the talk, with participants and other speakers, about the rapidly growing scenario of machine learning in the open, including principles and mental models explored in some readings!

The conference was extremely well organized and we learnt a lot. Massive congrats to the whole team, and hopefully we will see each other again another time!